You might want to include Spark packages such as Databricks' CSV reader or MLlib. To do so, you have to edit the configuration files of Jupyter's Spark kernel. By default, Jupyter kernels are installed inside /usr/local/share/jupyter/kernels. Navigate to this path and you should find a directory named apache_toree_scala, which contains the kernel.json file. It looks similar to:

    "PYTHONPATH": "/usr/lib/spark/python:/usr/lib/spark/python/lib/",
    "argv": ["/usr/local/share/jupyter/kernels/apache_toree_scala/bin/run.sh",

If you want to import a jar (to use its classes or objects) inside your notebook, the green part is just for that. Add it, and/or any other package you'd like Spark to import whenever you use Jupyter. Voilà! Don't forget to restart the Spark kernel inside your notebook to apply the changes in the configuration files.

Without the SSH tunnel to your master node, you'll see error 404 (page not found).
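The kernel.json edit described above can also be scripted. Here is a minimal sketch, under a few assumptions of mine: that the kernel reads extra Spark options from an environment entry named `SPARK_OPTS` (the key name differs between Toree versions, so check your own kernel.json), and that packages are requested with Spark's `--packages` option. The helper name `add_spark_packages` and the package coordinate in the usage example are only illustrations.

```python
import json
import shlex


def add_spark_packages(kernel_spec, packages, opts_key="SPARK_OPTS"):
    """Return a copy of a kernel.json dict whose env[opts_key] requests `packages`.

    `opts_key` is an assumption: verify which env entry your kernel.json uses.
    """
    spec = json.loads(json.dumps(kernel_spec))  # deep copy, keeps the input untouched
    env = spec.setdefault("env", {})
    tokens = shlex.split(env.get(opts_key, ""))

    # Pull out an existing "--packages a,b" pair so we can merge instead of duplicating.
    existing = []
    if "--packages" in tokens:
        idx = tokens.index("--packages")
        if idx + 1 < len(tokens):
            existing = tokens[idx + 1].split(",")
            del tokens[idx:idx + 2]
        else:
            del tokens[idx]

    merged = existing + [p for p in packages if p not in existing]
    tokens += ["--packages", ",".join(merged)]
    env[opts_key] = shlex.join(tokens)
    return spec


# Usage with an in-memory spec; the coordinate below is just an example value.
example = {"env": {"SPARK_OPTS": "--master=yarn"}, "argv": ["run.sh"]}
updated = add_spark_packages(example, ["com.databricks:spark-csv_2.10:1.4.0"])
print(json.dumps(updated, indent=2))
```

To apply it for real you would load kernel.json with `json.load`, pass the result through the helper, and write it back (with sudo rights on that directory), then restart the kernel.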


Tada! The Jupyter service is already running!

UPDATE: on newer versions of Jupyter, a user must have access to the service's token. To get it, SSH to the master node where Jupyter is running and ask Jupyter for the token of the running service. Copy the token parameter value, open your local web browser, surf to your master node address on port 8192, and enter the token on the login screen.

Note: if you haven't already done so, you'll have to tunnel your SSH connection to your master node (see the Windows guide or the Mac/Linux guide).
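The exact command for fetching the token did not survive in the text above. One command I know of that prints running servers together with their tokens is `jupyter notebook list`, which, on the versions I am aware of, prints lines shaped like `URL :: directory`. Assuming that shape, here is a small sketch that pulls the token parameter value out of such a line. The function name `extract_token` and the sample line are made up for illustration.

```python
from urllib.parse import parse_qs, urlparse


def extract_token(server_line):
    """Return the value of the `token` query parameter in a server listing line.

    Assumes the line looks like "http://host:port/?token=... :: /some/dir".
    Returns None when the URL carries no token.
    """
    url = server_line.split(" :: ", 1)[0].strip()
    values = parse_qs(urlparse(url).query).get("token", [])
    return values[0] if values else None


# Hypothetical sample line, not real output from a cluster.
sample = "http://localhost:8192/?token=abc123 :: /home/hadoop"
print(extract_token(sample))
```

The returned value is what you paste into the login screen.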

sudo curl | sudo tee /etc//bintray-sbt-rpm.repo


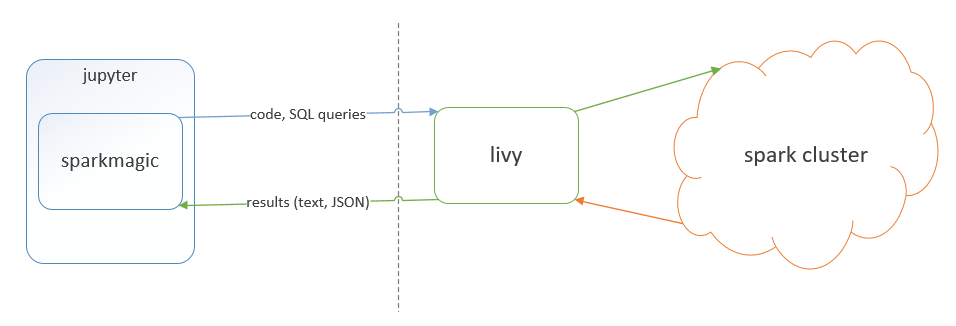

Jupyter's Spark Kernel is now part of Apache Toree, an incubator project that came out of IBM. It is a seamless binding to run your notebook snippets on your Spark cluster. To install it, execute the following on the master node (no need to run it on all nodes; this assumes EMR 4.x.x, and paths are different on previous versions):

    sudo /usr/local/bin/jupyter toree install --spark_home=/usr/lib/spark


The original Jupyter installation comes with only a Python kernel out of the box, so the installation has two steps: installing Jupyter, and then installing the Spark kernel.

To have Jupyter running on your AWS cluster (EMR 4.x.x versions), add the following bootstrap action:

    s3://elasticmapreduce.bootstrapactions/ipython-notebook/install-ipython-notebook

On previous EMR versions (AMI 3.x or earlier), download the bootstrap script from JustGiving's GitHub, save it on S3, and add it as a bootstrap action in your cluster.
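If you happen to create your clusters from Python rather than from the console (that is my assumption, the steps above don't depend on it), the bootstrap action can be described as a plain dictionary in the shape that boto3's EMR `run_job_flow` call accepts in its `BootstrapActions` list. The sketch below only builds that dictionary. The helper name `bootstrap_action` and the action name "Install Jupyter" are illustrative choices.

```python
def bootstrap_action(name, script_path, args=()):
    """Build one entry for the BootstrapActions list of an EMR cluster request."""
    if not script_path.startswith("s3://"):
        raise ValueError("bootstrap scripts are referenced by their s3:// location")
    return {
        "Name": name,
        "ScriptBootstrapAction": {
            "Path": script_path,
            "Args": list(args),
        },
    }


# The script location is the one given above for EMR 4.x.x.
JUPYTER_BOOTSTRAP = bootstrap_action(
    "Install Jupyter",
    "s3://elasticmapreduce.bootstrapactions/ipython-notebook/install-ipython-notebook",
)
print(JUPYTER_BOOTSTRAP)
```

You would then pass `BootstrapActions=[JUPYTER_BOOTSTRAP]` along with the rest of your cluster settings. For AMI 3.x, swap in the S3 path where you saved the downloaded script.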


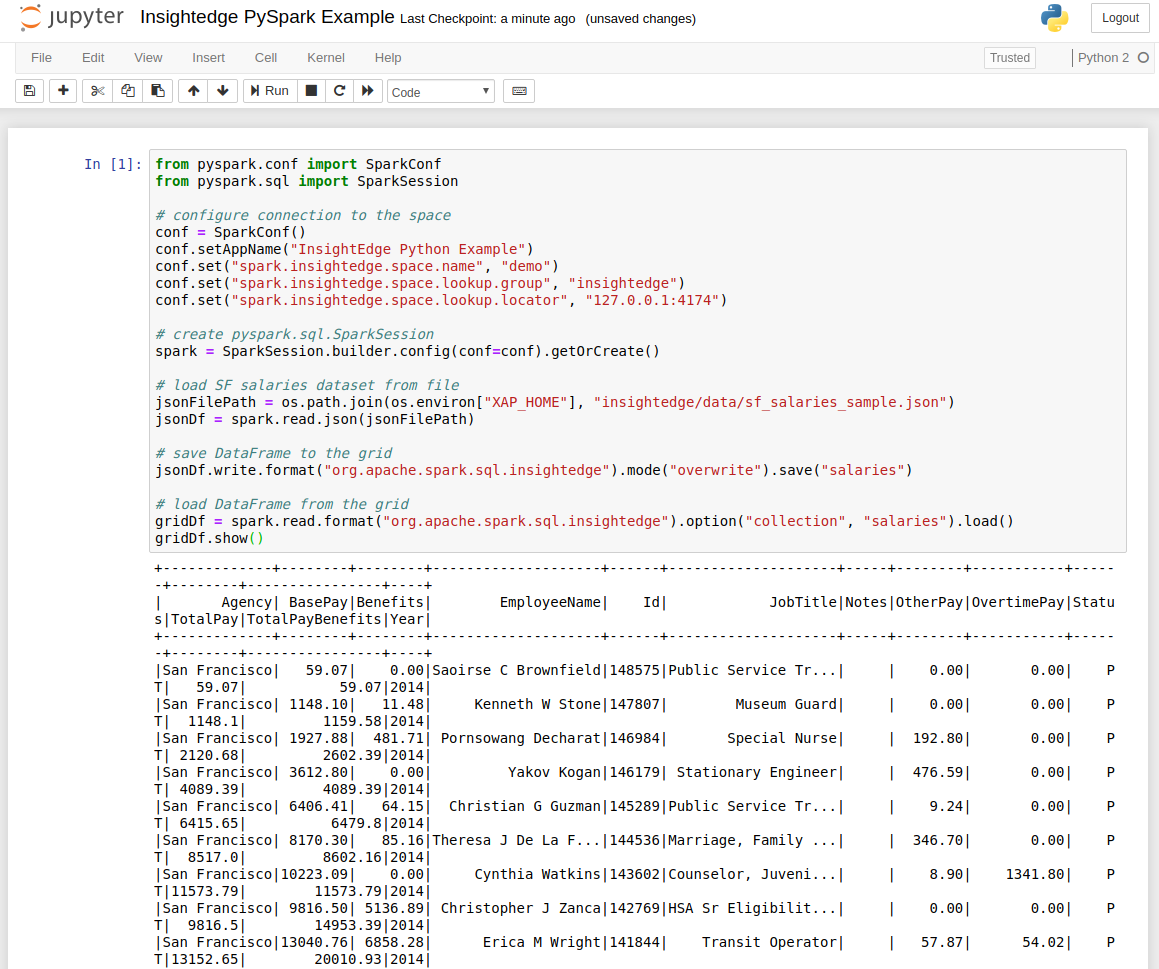

Installing the Jupyter notebook exposes a web service that is accessible from your web browser. It lets you write code inside the browser, hit CTRL+Enter, and have your snippets executed on your cluster without leaving the notebook.

In-code comments are not always sufficient if you want to maintain good documentation of your code. Sometimes you would like to add equations, images, complex text formats and more. Of course, you can generate a "wiki" page for your project, but what would be really cool is if you could embed some code inside it and execute it on demand to get the results, seamlessly.
